Jacobian matrix and determinant

From Wikipedia, the free encyclopedia


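In vector calculus, the Jacobian matrix is the matrix of all first-order partial derivatives of a vector-valued function. Suppose $F : \mathbb{R}^n \to \mathbb{R}^m$ is given by m real-valued component functions $F_1(x_1,\dots,x_n), \dots, F_m(x_1,\dots,x_n)$. The partial derivatives of these functions (if they exist) can be organized in an m-by-n matrix, the Jacobian matrix J of F:

$$J = \begin{bmatrix}
\dfrac{\partial F_1}{\partial x_1} & \cdots & \dfrac{\partial F_1}{\partial x_n} \\
\vdots & \ddots & \vdots \\
\dfrac{\partial F_m}{\partial x_1} & \cdots & \dfrac{\partial F_m}{\partial x_n}
\end{bmatrix}.$$

This matrix is also denoted by $J_F(x_1,\dots,x_n)$ and $\dfrac{\partial(F_1,\dots,F_m)}{\partial(x_1,\dots,x_n)}$. If $(x_1,\dots,x_n)$ are the usual orthogonal Cartesian coordinates, the i-th row (i = 1, ..., m) of this matrix corresponds to the gradient of the i-th component function $F_i$: $\nabla F_i$. Note that some books define the Jacobian as the transpose of the matrix given above.

The Jacobian determinant (often simply called the Jacobian) is the determinant of the Jacobian matrix (if m = n).

These concepts are named after the mathematician Carl Gustav Jacob Jacobi.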


Jacobian matrix

The Jacobian of a function describes the orientation of a tangent plane to the function at a given point. In this way, the Jacobian generalizes the gradient of a scalar-valued function of multiple variables, which itself generalizes the derivative of a scalar-valued function of a scalar. Likewise, the Jacobian can be thought of as describing the amount of "stretching" that a transformation imposes. For example, if $(x_2, y_2) = f(x_1, y_1)$ is used to transform an image, the Jacobian of f, $J(x_1, y_1)$, describes how much the image in the neighborhood of $(x_1, y_1)$ is stretched in the x and y directions.

If a function is differentiable at a point, its derivative is given in coordinates by the Jacobian, but a function does not need to be differentiable for the Jacobian to be defined, since only the partial derivatives are required to exist.

The importance of the Jacobian lies in the fact that it represents the best linear approximation to a differentiable function near a given point. In this sense, the Jacobian is the derivative of a multivariate function.

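If p is a point in $\mathbb{R}^n$ and F is differentiable at p, then its derivative is given by $J_F(p)$. In this case, the linear map described by $J_F(p)$ is the best linear approximation of F near the point p, in the sense that

$$F(x) = F(p) + J_F(p)(x - p) + o(\|x - p\|)$$

for x close to p, where o is the little-o notation (for $x \to p$) and $\|x - p\|$ is the distance between x and p.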
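The best-linear-approximation property can be checked numerically. The following is a minimal sketch, not a reference implementation: the helper names `numerical_jacobian` and `linear_approximation`, the step size `h`, and the example map `F` are all assumptions introduced here for illustration. The Jacobian is approximated by central differences, and the linear model F(p) + J_F(p)(x − p) is compared with the exact value F(x).

```python
import math

def numerical_jacobian(F, p, h=1e-6):
    """Approximate the m-by-n Jacobian of F at p by central differences."""
    n = len(p)
    m = len(F(p))
    J = [[0.0] * n for _ in range(m)]
    for j in range(n):
        forward = list(p)
        backward = list(p)
        forward[j] += h
        backward[j] -= h
        f_plus, f_minus = F(forward), F(backward)
        for i in range(m):
            # Entry (i, j) is the partial derivative of F_i with respect to x_j.
            J[i][j] = (f_plus[i] - f_minus[i]) / (2 * h)
    return J

def linear_approximation(F, p, x):
    """Evaluate the linear model F(p) + J_F(p) (x - p)."""
    J = numerical_jacobian(F, p)
    Fp = F(p)
    dx = [xi - pi for xi, pi in zip(x, p)]
    return [Fp[i] + sum(J[i][j] * dx[j] for j in range(len(p)))
            for i in range(len(Fp))]

# Hypothetical example map F(x, y) = (x^2 * y, 5x + sin y).
def F(v):
    x, y = v
    return [x * x * y, 5 * x + math.sin(y)]

p = [1.0, 2.0]
x = [1.01, 2.02]
J_p = numerical_jacobian(F, p)        # close to [[4, 1], [5, cos(2)]]
approx = linear_approximation(F, p, x)
exact = F(x)
error = max(abs(a - e) for a, e in zip(approx, exact))
print(J_p, error)
```

Because the remainder is o(‖x − p‖), the error shrinks faster than the distance between x and p as x approaches p.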

In a sense, both the gradient and Jacobian are "first derivatives": the former is the first derivative of a scalar function of several variables, the latter the first derivative of a vector function of several variables. In general, the gradient can be regarded as a special version of the Jacobian: it is the Jacobian of a scalar function of several variables.

The Jacobian of the gradient has a special name: the Hessian matrix, which in a sense is the "second derivative" of the scalar function of several variables in question.
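As a small worked illustration (the function f below is a hypothetical example chosen here, not one taken from the text), take f(x, y) = x²y. Its gradient is a vector function of two variables, and the Jacobian of that gradient is the Hessian:

```latex
f(x, y) = x^2 y, \qquad
\nabla f(x, y) = \begin{bmatrix} 2xy \\ x^2 \end{bmatrix}, \qquad
H_f(x, y) = J_{\nabla f}(x, y) =
\begin{bmatrix}
\dfrac{\partial (2xy)}{\partial x} & \dfrac{\partial (2xy)}{\partial y} \\[3pt]
\dfrac{\partial (x^2)}{\partial x} & \dfrac{\partial (x^2)}{\partial y}
\end{bmatrix}
=
\begin{bmatrix} 2y & 2x \\ 2x & 0 \end{bmatrix}.
```

The result is symmetric, as expected when the second partial derivatives are continuous.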

Inverse

According to the inverse function theorem, the matrix inverse of the Jacobian matrix of an invertible function is the Jacobian matrix of the inverse function. That is, for some function $F : \mathbb{R}^n \to \mathbb{R}^n$ and a point p in $\mathbb{R}^n$,

$$J_{F^{-1}}(F(p)) = [J_F(p)]^{-1}.$$

Uses

Dynamical systems

Consider a dynamical system of the form x' = F(x), where x' is the (component-wise) time derivative of x, and $F : \mathbb{R}^n \to \mathbb{R}^n$ is continuous and differentiable. If $F(x_0) = 0$, then $x_0$ is a stationary point (also called a critical point, not to be confused with a fixed point). The behavior of the system near a stationary point is related to the eigenvalues of $J_F(x_0)$, the Jacobian of F at the stationary point.[1] Specifically, if all the eigenvalues have a negative real part, then the system is stable near the stationary point; if any eigenvalue has a positive real part, then the point is unstable.

Image Jacobian

In computer vision, the image Jacobian is the relationship between the movement of the camera and the apparent motion of the image (optical flow). A point in space with 3D coordinates $P = (X, Y, Z)$ in the frame of the camera is projected in the image as a 2D point with coordinates $p = (x, y)$. Neglecting the intrinsic parameters of the camera and assuming focal distance 1, the relation between them is

$$x = \frac{X}{Z}, \qquad y = \frac{Y}{Z}.$$

Differentiating this with respect to time gives

$$\dot{x} = \frac{\dot{X}}{Z} - \frac{X\dot{Z}}{Z^2} = \frac{\dot{X} - x\dot{Z}}{Z}, \qquad
\dot{y} = \frac{\dot{Y}}{Z} - \frac{Y\dot{Z}}{Z^2} = \frac{\dot{Y} - y\dot{Z}}{Z}.$$

Grouping these equations:

$$\begin{bmatrix} \dot{x} \\ \dot{y} \end{bmatrix} =
\begin{bmatrix} \dfrac{1}{Z} & 0 & -\dfrac{x}{Z} \\[3pt] 0 & \dfrac{1}{Z} & -\dfrac{y}{Z} \end{bmatrix}
\begin{bmatrix} \dot{X} \\ \dot{Y} \\ \dot{Z} \end{bmatrix}.$$

Finally, for a given pixel in the image with coordinates $(x, y)$, the apparent motion[2] is the image velocity $(\dot{x}, \dot{y})$ given by this relation.
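The relation between 3D point velocity and apparent image motion can be sketched in code. This is an illustrative sketch under stated assumptions: it uses the pinhole projection x = X/Z, y = Y/Z with focal distance 1 (as the text assumes), it considers only the velocity of the point relative to the camera frame, and the function names `project`, `image_jacobian` and `image_velocity` as well as the sample values are hypothetical. The prediction of the 2-by-3 matrix is compared with a finite-difference estimate of the projected motion.

```python
def project(P):
    """Pinhole projection with focal distance 1: (X, Y, Z) -> (x, y)."""
    X, Y, Z = P
    return (X / Z, Y / Z)

def image_jacobian(x, y, Z):
    """2-by-3 matrix mapping the point velocity (Xdot, Ydot, Zdot) to (xdot, ydot)."""
    return [[1.0 / Z, 0.0, -x / Z],
            [0.0, 1.0 / Z, -y / Z]]

def image_velocity(P, V):
    """Apparent image motion of the point P moving with velocity V."""
    x, y = project(P)
    J = image_jacobian(x, y, P[2])
    return tuple(sum(J[i][k] * V[k] for k in range(3)) for i in range(2))

P = (0.5, -0.2, 2.0)   # sample 3D point in the camera frame
V = (0.1, 0.3, -0.4)   # sample velocity of that point
predicted = image_velocity(P, V)

# Finite-difference check: move the point a tiny step and re-project it.
dt = 1e-6
moved = tuple(P[k] + dt * V[k] for k in range(3))
x0, y0 = project(P)
x1, y1 = project(moved)
finite_difference = ((x1 - x0) / dt, (y1 - y0) / dt)
print(predicted, finite_difference)
```

The two estimates agree up to the discretization error of the finite difference, which is what one expects if the matrix is the Jacobian of the projection.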

Newton's method

A system of coupled nonlinear equations can be solved iteratively by Newton's method. This method uses the Jacobian matrix of the system of equations.

The following is detailed MATLAB code in which the Jacobian is approximated by finite differences (although there is a built-in 'jacobian' command):

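    function s = newton(f, x, tol)
    % Solve f(x) = 0 by Newton's method.
    % f is a multivariable function handle (row vector in, row vector out),
    % x is a starting point (row vector)
    if nargin == 2
        tol = 10^(-5);
    end
    while true
        % x and f(x) are row vectors, so we need transpose operations here
        y = x.' - jacob(f, x) \ f(x).';   % get the next point (column vector)
        x = y.';                          % back to a row vector
        if norm(f(x)) < tol               % stop once the residual is small
            s = x;
            return;
        end
    end
    end

    function j = jacob(f, x)   % approximately calculate the Jacobian matrix
    h = 0.001;                 % finite-difference step
    k = length(x);
    j = zeros(k, k);
    for m = 1:k
        x2 = x;
        x2(m) = x(m) + h;
        j(:, m) = (f(x2) - f(x)).' / h;   % partial derivatives w.r.t. x(m) in m-th column
    end
    end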

Jacobian determinant

If m = n, then F is a function from n-space to n-space and the Jacobian matrix is a square matrix. We can then form its determinant, known as the Jacobian determinant. The Jacobian determinant is sometimes simply called "the Jacobian."

The Jacobian determinant at a given point gives important information about the behavior of F near that point. For instance, the continuously differentiable function F is invertible near a point $p \in \mathbb{R}^n$ if the Jacobian determinant at p is non-zero. This is the inverse function theorem. Furthermore, if the Jacobian determinant at p is positive, then F preserves orientation near p; if it is negative, F reverses orientation. The absolute value of the Jacobian determinant at p gives us the factor by which the function F expands or shrinks volumes near p; this is why it occurs in the general substitution rule.
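The orientation and volume-scaling statements can be illustrated in two dimensions. The sketch below is only an illustration: the maps `square_map` and `swap_map`, the helper names, and the side length of the test square are assumptions introduced here. It maps the corners of a tiny counter-clockwise square around p and compares the ratio of signed areas with the Jacobian determinant at p.

```python
def signed_area(points):
    """Shoelace formula: positive for counter-clockwise polygons."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:] + points[:1]):
        total += x0 * y1 - x1 * y0
    return total / 2.0

def area_ratio(F, p, side=1e-3):
    """Signed area of the image of a small square around p, divided by its area."""
    px, py = p
    s = side / 2.0
    # Corners listed counter-clockwise.
    square = [(px - s, py - s), (px + s, py - s), (px + s, py + s), (px - s, py + s)]
    image = [F(x, y) for x, y in square]
    return signed_area(image) / signed_area(square)

# Hypothetical map with Jacobian [[2x, -2y], [2y, 2x]] and determinant 4(x^2 + y^2).
def square_map(x, y):
    return (x * x - y * y, 2.0 * x * y)

# Hypothetical map that swaps the coordinates; its Jacobian determinant is -1.
def swap_map(x, y):
    return (y, x)

p = (1.0, 2.0)
det_square_map = 4.0 * (p[0] ** 2 + p[1] ** 2)
ratio_square_map = area_ratio(square_map, p)   # positive: orientation preserved
ratio_swap_map = area_ratio(swap_map, p)       # negative: orientation reversed
print(det_square_map, ratio_square_map, ratio_swap_map)
```

The sign of each ratio reflects whether the map preserves or reverses orientation near p, and its absolute value approximates the local area scaling factor.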

Uses

The Jacobian determinant is used when making a change of variables when evaluating a multiple integral of a function over a region within its domain. To account for the change of coordinates, the absolute value of the Jacobian determinant arises as a multiplicative factor within the integral. Normally it is required that the change of coordinates be injective between the coordinates that determine the domain. The Jacobian determinant, as a result, is usually well defined. The Jacobian can also be used to study systems of differential equations at an equilibrium point or to approximate solutions near an equilibrium point.

Examples

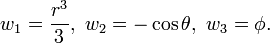

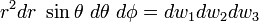

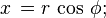

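Example 1. The transformation from spherical coordinates (r, θ, φ) to Cartesian coordinates (x1, x2, x3) is given by the function $F : \mathbb{R}^+ \times [0,\pi] \times [0,2\pi) \to \mathbb{R}^3$ with components:

$$x_1 = r\,\sin\theta\,\cos\phi, \qquad x_2 = r\,\sin\theta\,\sin\phi, \qquad x_3 = r\,\cos\theta.$$

The Jacobian matrix for this coordinate change is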

$$J_F(r,\theta,\phi) = \begin{bmatrix}
\dfrac{\partial x_1}{\partial r} & \dfrac{\partial x_1}{\partial \theta} & \dfrac{\partial x_1}{\partial \phi} \\[3pt]
\dfrac{\partial x_2}{\partial r} & \dfrac{\partial x_2}{\partial \theta} & \dfrac{\partial x_2}{\partial \phi} \\[3pt]
\dfrac{\partial x_3}{\partial r} & \dfrac{\partial x_3}{\partial \theta} & \dfrac{\partial x_3}{\partial \phi}
\end{bmatrix} = \begin{bmatrix}
\sin\theta\, \cos\phi & r\, \cos\theta\, \cos\phi & -r\, \sin\theta\, \sin\phi \\
\sin\theta\, \sin\phi & r\, \cos\theta\, \sin\phi & r\, \sin\theta\, \cos\phi \\
\cos\theta & -r\, \sin\theta & 0
\end{bmatrix}.$$

The determinant is $r^2 \sin\theta$. As an example, since $dV = dx_1\,dx_2\,dx_3$, this determinant implies that the differential volume element is $dV = r^2 \sin\theta\, dr\, d\theta\, d\phi$. Nevertheless, this determinant varies with the coordinates. To avoid any variation, new coordinates can be defined as $w_1 = r^3/3$, $w_2 = -\cos\theta$, $w_3 = \phi$.[3] Now the determinant equals 1 and the volume element becomes $r^2 \sin\theta\, dr\, d\theta\, d\phi = dw_1\, dw_2\, dw_3$.
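The value of this determinant can be checked numerically. The sketch below is illustrative only: the function names `spherical_jacobian` and `det3` and the sample point are assumptions made here. It fills in the Jacobian matrix of the spherical-to-Cartesian map entry by entry, following the standard components x1 = r sin θ cos φ, x2 = r sin θ sin φ, x3 = r cos θ, and compares its determinant with r² sin θ.

```python
import math

def spherical_jacobian(r, theta, phi):
    """Jacobian matrix of (r, theta, phi) -> (x1, x2, x3)."""
    st, ct = math.sin(theta), math.cos(theta)
    sp, cp = math.sin(phi), math.cos(phi)
    return [
        [st * cp, r * ct * cp, -r * st * sp],
        [st * sp, r * ct * sp, r * st * cp],
        [ct, -r * st, 0.0],
    ]

def det3(M):
    """Determinant of a 3-by-3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

r, theta, phi = 2.0, 0.7, 1.3   # arbitrary sample point
det_value = det3(spherical_jacobian(r, theta, phi))
expected = r ** 2 * math.sin(theta)
print(det_value, expected)
```

The determinant does not depend on φ, which matches the closed form r² sin θ.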

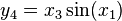

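Example 2. The Jacobian matrix of the function $F : \mathbb{R}^3 \to \mathbb{R}^4$ with components

$$y_1 = x_1, \qquad y_2 = 5x_3, \qquad y_3 = 4x_2^2 - 2x_3, \qquad y_4 = x_3 \sin(x_1)$$

is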

$$J_F(x_1,x_2,x_3) = \begin{bmatrix}
\dfrac{\partial y_1}{\partial x_1} & \dfrac{\partial y_1}{\partial x_2} & \dfrac{\partial y_1}{\partial x_3} \\[3pt]
\dfrac{\partial y_2}{\partial x_1} & \dfrac{\partial y_2}{\partial x_2} & \dfrac{\partial y_2}{\partial x_3} \\[3pt]
\dfrac{\partial y_3}{\partial x_1} & \dfrac{\partial y_3}{\partial x_2} & \dfrac{\partial y_3}{\partial x_3} \\[3pt]
\dfrac{\partial y_4}{\partial x_1} & \dfrac{\partial y_4}{\partial x_2} & \dfrac{\partial y_4}{\partial x_3}
\end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 0 & 5 \\ 0 & 8x_2 & -2 \\ x_3\cos(x_1) & 0 & \sin(x_1) \end{bmatrix}.$$

This example shows that the Jacobian need not be a square matrix.

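Example 3. The transformation from polar coordinates (r, φ) to Cartesian coordinates (x, y) is given by

$$x = r\cos\phi, \qquad y = r\sin\phi,$$

with Jacobian matrix

$$J(r,\phi) = \begin{bmatrix}
\dfrac{\partial x}{\partial r} & \dfrac{\partial x}{\partial \phi} \\[3pt]
\dfrac{\partial y}{\partial r} & \dfrac{\partial y}{\partial \phi}
\end{bmatrix} = \begin{bmatrix} \cos\phi & -r\sin\phi \\ \sin\phi & r\cos\phi \end{bmatrix}.$$

The Jacobian determinant is equal to r. This shows how an integral in the Cartesian coordinate system is transformed into an integral in the polar coordinate system:

$$\iint_A dx\, dy = \iint_B r\, dr\, d\phi,$$

where B is the region in the (r, φ) plane that corresponds to A.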
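The role of the factor r in the polar integral can be seen numerically. The following is a rough sketch under stated assumptions: the function name `integrate_polar`, the grid sizes, and the two test integrands are hypothetical choices made here. It integrates over a disk with a midpoint rule on an (r, φ) grid, multiplying each sample by the Jacobian determinant r.

```python
import math

def integrate_polar(f, radius, n_r=200, n_phi=200):
    """Midpoint-rule estimate of the integral of f(x, y) over a disk,
    computed in polar coordinates with the Jacobian determinant r."""
    dr = radius / n_r
    dphi = 2 * math.pi / n_phi
    total = 0.0
    for i in range(n_r):
        r = (i + 0.5) * dr
        for j in range(n_phi):
            phi = (j + 0.5) * dphi
            x, y = r * math.cos(phi), r * math.sin(phi)
            total += f(x, y) * r * dr * dphi   # r is the Jacobian determinant
    return total

# Area of the unit disk; the exact value is pi.
area = integrate_polar(lambda x, y: 1.0, 1.0)
# Integral of x^2 + y^2 over the unit disk; the exact value is pi / 2.
moment = integrate_polar(lambda x, y: x * x + y * y, 1.0)
print(area, moment)
```

Leaving out the factor r would weight cells near the origin as heavily as cells near the boundary, which would overestimate both integrals.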

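Example 4. The Jacobian determinant of the function $F : \mathbb{R}^3 \to \mathbb{R}^3$ with components

$$y_1 = 5x_2, \qquad y_2 = 4x_1^2 - 2\sin(x_2 x_3), \qquad y_3 = x_2 x_3$$

is

$$\begin{vmatrix} 0 & 5 & 0 \\ 8x_1 & -2x_3\cos(x_2 x_3) & -2x_2\cos(x_2 x_3) \\ 0 & x_3 & x_2 \end{vmatrix}
= -8x_1 \begin{vmatrix} 5 & 0 \\ x_3 & x_2 \end{vmatrix} = -40\,x_1 x_2.$$

From this we see that F reverses orientation near those points where x1 and x2 have the same sign; the function is locally invertible everywhere except near points where x1 = 0 or x2 = 0. Intuitively, if you start with a tiny object around the point (1,1,1) and apply F to that object, you will get an object with approximately 40 times the volume of the original one.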
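The sign and size of this determinant can be checked at sample points. The sketch below rests on an assumption that should be read carefully: it takes the components of F to be y1 = 5·x2, y2 = 4·x1² − 2·sin(x2·x3), y3 = x2·x3, which give the determinant −40·x1·x2 and are consistent with the statements above about orientation and the factor 40. The helper names `jacobian_F` and `det3` are hypothetical.

```python
import math

def jacobian_F(x1, x2, x3):
    """Jacobian of the assumed map
    (x1, x2, x3) -> (5*x2, 4*x1**2 - 2*sin(x2*x3), x2*x3)."""
    c = math.cos(x2 * x3)
    return [
        [0.0, 5.0, 0.0],
        [8 * x1, -2 * x3 * c, -2 * x2 * c],
        [0.0, x3, x2],
    ]

def det3(M):
    """Determinant of a 3-by-3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

# x1 and x2 have the same sign: negative determinant, orientation reversed.
det_same_sign = det3(jacobian_F(1.0, 1.0, 1.0))
# x1 and x2 have opposite signs: positive determinant, orientation preserved.
det_opposite_sign = det3(jacobian_F(-1.0, 1.0, 2.0))
print(det_same_sign, det_opposite_sign)
```

The absolute value at (1,1,1) is the local volume scaling factor, in line with the factor of about 40 described above.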


Notes

- [1] D.K. Arrowsmith and C.M. Place, Dynamical Systems, Section 3.3, Chapman & Hall, London, 1992. ISBN 0-412-39080-9.

- [2] Fermín, Leonardo; Medina et al. (2009). "Estimation of Velocities Components using Optical Flow and Inner Product". Lecture Notes in Computer Science 396: 349-358.

- [3] Taken from http://www.sjcrothers.plasmaresources.com/schwarzschild.pdf - On the Gravitational Field of a Mass Point according to Einstein’s Theory by K. Schwarzschild - arXiv:physics/9905030 v1 (text of the original paper, in Wikisource).

External links

- Mathworld A more technical explanation of Jacobians

