| empcop.test {copula} | R Documentation |

Multivariate independence test based on the empirical copula process

Description

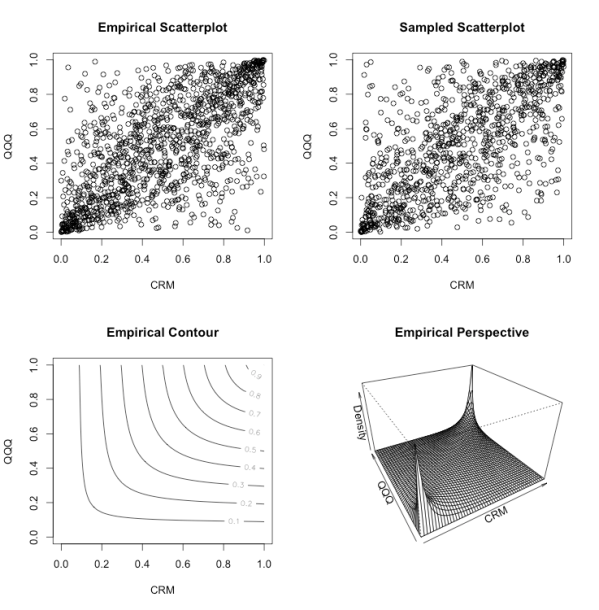

Multivariate independence test based on the empirical copula process as proposed by Christian Genest and Bruno Rémillard. The test can be seen as composed of three steps: (i) a simulation step, which consists in simulating the distribution of the test statistics under independence for the sample size under consideration; (ii) the test itself, which consists in computing the approximate p-values of the test statistics with respect to the empirical distributions obtained in step (i); and (iii) the display of a graphic, called a dependogram, which helps to understand the type of departure from independence, if any. More details can be found in the articles cited in the References section.

Usage

empcop.simulate(n, p, m, N = 2000)

empcop.test(x, d, m, alpha = 0.05)

dependogram(test, pvalues = FALSE)

Arguments

n |

Sample size when simulating the distribution of the test statistics under independence. |

p |

Dimension of the data when simulating the distribution of the test statistics under independence. |

m |

Maximum cardinality of the subsets for which a test statistic is to be computed. It makes sense to consider m << p, especially when p is large. In this case, the null hypothesis corresponds to the independence of all sub-random vectors composed of at most m variables. |

N |

Number of repetitions when simulating under independence. |

x |

Data frame or data matrix containing realizations (one per line) of the random vector whose independence is to be tested. |

d |

Object of class empcop.distribution as returned by the function empcop.simulate. It can be regarded as the empirical distribution of the test statistics under independence. |

alpha |

Significance level used in the computation of the critical values for the test statistics. |

test |

Object of class empcop.test as returned by the function empcop.test. |

pvalues |

Logical indicating whether the dependogram should be drawn from test statistics or the corresponding p-values. |
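To make the role of m concrete, the following base R sketch lists the subsets of {1, ..., p} whose cardinality lies between 2 and m, which are the subsets for which a statistic is computed according to the description of m above. The helper name enumerate_subsets is hypothetical and is not part of the copula package; the package may build its subsets differently.

```r
# Hypothetical helper (not part of the copula package): list every subset of
# {1, ..., p} whose cardinality lies between 2 and m.
enumerate_subsets <- function(p, m) {
  stopifnot(p >= 2, m >= 2, m <= p)
  subsets <- list()
  for (k in 2:m) {
    # combn(p, k, simplify = FALSE) returns all k-element subsets of 1:p as a list
    subsets <- c(subsets, combn(p, k, simplify = FALSE))
  }
  subsets
}

length(enumerate_subsets(5, 5))  # 26 subsets, i.e. 2^5 - 5 - 1
length(enumerate_subsets(5, 3))  # 20 subsets: choose(5, 2) + choose(5, 3)
```

The count grows quickly with p when m = p, which is one reason to prefer a small m for high-dimensional data.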

Details

See the references below for more details, especially the third one.

Value

The function empcop.simulate returns an object of class empcop.distribution whose attributes are: sample.size, data.dimension, max.card.subsets, number.repetitons, subsets (list of the subsets for which test statistics have been computed), subsets.binary (subsets in binary 'integer' notation) and dist.statistics.independence (an N-line matrix containing the values of the test statistics for each subset and each repetition).
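As an illustration of how a matrix of simulated statistics can yield approximate p-values, here is a minimal base R sketch. It assumes that large values of a statistic indicate dependence, so the p-value is the fraction of simulated values at least as large as the observed one. The function empirical_pvalues and the toy data are hypothetical; this is not the package's internal code.

```r
# Hypothetical helper (not the package's internal code): approximate p-values
# from a matrix of statistics simulated under independence.
#   null_stats: N x K matrix, one row per repetition, one column per subset
#   observed:   length-K vector of statistics computed on the data
empirical_pvalues <- function(null_stats, observed) {
  stopifnot(is.matrix(null_stats), ncol(null_stats) == length(observed))
  # For each subset, the fraction of simulated statistics >= the observed one
  sapply(seq_along(observed), function(k) mean(null_stats[, k] >= observed[k]))
}

# Toy null distribution: N = 10 repetitions, K = 2 subsets
null_stats <- cbind(1:10, seq(0.1, 1, by = 0.1))
empirical_pvalues(null_stats, c(8, 0.05))  # 0.3 and 1.0
```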

The function empcop.test returns an object of class empcop.test whose attributes are: subsets, statistics, critical.values, pvalues, fisher.pvalue (a p-value resulting from a combination à la Fisher of the previous p-values), tippett.pvalue (a p-value resulting from a combination à la Tippett of the previous p-values), alpha (global significance level of the test) and beta (1 - beta is the significance level per statistic).

Author(s)

Ivan Kojadinovic, ivan.kojadinovic@univ-nantes.fr

References

P. Deheuvels (1979). La fonction de dépendance empirique et ses propriétés: un test non paramétrique d'indépendance. Acad. Roy. Belg. Bull. Cl. Sci. 5th Ser. 65, 274-292.

P. Deheuvels (1981). A non parametric test for independence. Publ. Inst. Statist. Univ. Paris 26, 29-50.

C. Genest and B. Rémillard (2004). Tests of independence and randomness based on the empirical copula process. Test, 13, 335-369.

C. Genest, J.-F. Quessy and B. Rémillard (2006). Local efficiency of a Cramér-von Mises test of independence. Journal of Multivariate Analysis, 97, 274-294.

C. Genest, J.-F. Quessy and B. Rémillard (2007). Asymptotic local efficiency of Cramér-von Mises tests for multivariate independence. The Annals of Statistics, 35, in press.

Examples

## Consider the following example taken from

## Genest and Remillard (2004), p 352:

x <- matrix(rnorm(500),100,5)

x[,1] <- abs(x[,1]) * sign(x[,2] * x[,3])

x[,5] <- x[,4]/2 + sqrt(3) * x[,5]/2

## In order to test for independence "within" x, the first step consists

## in simulating the distribution of the test statistics under

## independence for the same sample size and dimension,

## i.e. n=100 and p=5. As we are going to consider all the subsets of

## {1,...,5} whose cardinality is between 2 and 5, we set m=5.

## This may take a while...

d <- empcop.simulate(100,5,5)

## The next step consists in performing the test itself:

test <- empcop.test(x,d,5)

## Let us see the results:

test

## Display the dependogram:

dependogram(test)

## We could have tested for a weaker form of independence, for instance,

## by only computing statistics for subsets whose cardinality is between 2

## and 3:

test <- empcop.test(x,d,3)

test

dependogram(test)

## Consider now the following data:

y <- matrix(runif(500),100,5)

## and perform the test:

test <- empcop.test(y,d,3)

test

dependogram(test)
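The printed test object reports fisher.pvalue and tippett.pvalue, which combine the per-subset p-values. The sketch below shows the classical textbook forms of these two combinations, assuming the individual p-values are independent and uniform on (0, 1) under the null hypothesis. The function combine_pvalues is hypothetical, and the package may compute its combined p-values differently (for instance from the simulated distribution).

```r
# Classical combinations of K p-values, assuming independence and uniformity
# under the null hypothesis (textbook versions, not the package's code).
combine_pvalues <- function(p) {
  stopifnot(all(p > 0), all(p <= 1))
  k <- length(p)
  # Fisher: -2 * sum(log(p)) follows a chi-square law with 2k degrees of freedom
  fisher_stat <- -2 * sum(log(p))
  fisher <- pchisq(fisher_stat, df = 2 * k, lower.tail = FALSE)
  # Tippett: based on the smallest p-value; P(min <= t) = 1 - (1 - t)^k
  tippett <- 1 - (1 - min(p))^k
  c(fisher = fisher, tippett = tippett)
}

# Illustrative p-values (made up for this sketch)
combine_pvalues(c(0.01, 0.20, 0.45, 0.80))
```

With a single p-value, both combinations return that p-value unchanged, which is a quick sanity check of the formulas.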

## NB: In order to save d for future use, the save function can be used.
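A minimal sketch of saving and restoring d with base R's save and load follows. The list used here is only a stand-in for the object returned by empcop.simulate, and the file name is hypothetical.

```r
# Stand-in for the object returned by empcop.simulate (hypothetical contents)
d <- list(sample.size = 100, data.dimension = 5)

path <- file.path(tempdir(), "empcop_d.RData")  # hypothetical file name
save(d, file = path)   # write d to disk

rm(d)
load(path)             # restores an object named d in the current environment
d$sample.size          # 100
```

Since the simulation step can be slow, reloading a saved d avoids repeating it for data sets with the same sample size and dimension.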

[Package copula version 0.5-3 Index]